Welcome to Touchable TV!

In addition to showcasing their 8K, 7680×4320, Ultra-High-Def (Ridiculous-Def?) TV broadcasting kit last weekend, Japan’s NHK also demoed a haptic feedback device that simulates virtual 3D objects in real time. And the thing is, it’s really just a robot that, when you touch it, kinda touches you back.

NHK (Nippon Hōsō Kyōkai/Japan Broadcasting Corporation) is a public media organization somewhat analogous to the American PBS. However, unlike its American counterpart, the J-broadcaster has its own research arm: NHK Science & Technology Research Laboratories. Which is nice, because in cooperation with various corporate partners, NHK seriously delivers the tech.

Okay fine… so where’s the robot?

Haptic Virtual Reality that’s Actually Virtual – Just Put Your Finger in This Robotic Thingy!

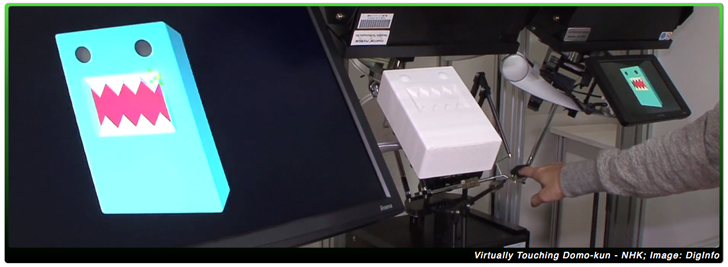

In the image above, a brave test pilot is placing his index finger into the locus of a five-point artificial haptic feedback environment. The system analyzes and models a virtual 3D object; that model in turn governs the movements and relative resistances of five robotic arms, each controlling one of the five feedback points, and together they generate a focused area of stimulus and response. That’s a complicated way to explain a “robotic, artificial sense of touch,” but conceptually the idea is quite simple:

#1. Put your finger in here and strap on the Velcro.

#2. It’ll feel like you’re touching something that doesn’t physically exist, like Domo-kun (Dōmo-koon) here.

Each of those shiny round points is the terminus of a robotic arm that either gives way or holds steady based on the position of the finger relative to the contours of the object being simulated. Each point’s position and resistance are updated every 1/1000th of a second, i.e. 1,000 times per second. Not bad.
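To make the “gives way or holds steady” idea concrete, here is a minimal sketch of one common technique, penalty-based haptic rendering, applied to five contact points. This is not NHK’s implementation: the sphere standing in for the virtual object, the `Sphere`, `point_force`, and `simulate` names, the stiffness value, and the fingertip layout in `OFFSETS` are all hypothetical. Only the five points and the 1,000-updates-per-second rate come from the description above.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


# Hypothetical stand-in for the simulated object: a sphere. A real system
# would model an arbitrary 3D shape (say, a Domo-kun).
@dataclass
class Sphere:
    center: Vec3
    radius: float  # metres

    def signed_distance(self, p: Vec3) -> float:
        """Distance from p to the surface: negative inside, positive outside."""
        return math.dist(p, self.center) - self.radius


UPDATE_HZ = 1000       # from the article: one update every 1/1000th of a second
DT = 1.0 / UPDATE_HZ   # seconds per update tick
STIFFNESS = 500.0      # hypothetical virtual spring constant, N/m

# Hypothetical layout: five contact points around the fingertip,
# given in metres relative to the fingertip position.
OFFSETS: list[Vec3] = [
    (0.0, 0.0, -0.008),  # under the finger pad
    (0.006, 0.0, 0.0),
    (-0.006, 0.0, 0.0),
    (0.0, 0.006, 0.0),
    (0.0, -0.006, 0.0),
]


def contact_points(finger: Vec3) -> list[Vec3]:
    """Absolute positions of the five contact points for one fingertip position."""
    return [tuple(f + o for f, o in zip(finger, off)) for off in OFFSETS]


def point_force(obj: Sphere, p: Vec3) -> float:
    """Penalty-based resistance for one contact point, in newtons."""
    depth = -obj.signed_distance(p)  # how far the point has pushed into the surface
    # Not touching the object: the arm gives way (zero resistance).
    # Pushing into it: the arm holds steady, like a spring compressed by `depth`.
    return STIFFNESS * depth if depth > 0 else 0.0


def simulate(obj: Sphere, finger_path: list[Vec3]) -> list[list[float]]:
    """Forces on all five points for each tick; finger_path holds one position per DT."""
    return [[point_force(obj, p) for p in contact_points(finger)] for finger in finger_path]
```

For example, with a sphere of 2 cm radius at the origin and the fingertip 2.5 cm above its center, only the point under the finger pad penetrates the surface (by 3 mm), so `simulate(Sphere((0.0, 0.0, 0.0), 0.02), [(0.0, 0.0, 0.025)])` gives about 1.5 N on that point and zero on the other four. A real device would also have to hit that 1 ms deadline on every tick; the sketch only computes one set of forces per tick.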

Until VR becomes a direct neural interface à la The Matrix, practical, full-immersion VR in the physical sense will, for now and for a while, depend on low-to-medium-resolution interactive haptic feedback interfaces that are intrinsically robotic. For virtualizing entirely digital, non-physical artifacts, NHK’s device is a step in that direction.

Of course, five points of interactivity might not sound like much, but given the generally leapfroggy nature of technological advancement, replicating and then surpassing the haptic resolution we now experience via the estimated 2,500 nerve receptors/cm² in the human hand doesn’t seem too tall an order.

If that does seem too tall, if that does sound too far out and overly optimistic, if it seems impossible that we’d ever be able to cram 2,500 sensory & feedback robots into a square centimeter – well, then your robo-dorkery score is low and you need to pay more attention. Because dude, we’re already building nanorobots atom-by-atom. Not an “if” question, this one.
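For a sense of scale, here is the arithmetic behind cramming 2,500 points into a square centimeter, taking the article’s 2,500 receptors/cm² estimate at face value and assuming, purely for simplicity, a uniform square grid of actuators:

```latex
\[
\frac{1\,\text{cm}^2}{2500} = \frac{100\,\text{mm}^2}{2500} = 0.04\,\text{mm}^2 \text{ per point},
\qquad
\text{pitch} = \sqrt{0.04\,\text{mm}^2} = 0.2\,\text{mm}
\]
```

So each sensing-and-feedback unit would have to fit in a cell about 0.2 mm (200 µm) on a side: tiny by conventional robotics standards, but vast compared with machines built atom by atom.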

Neat… But Anything Really New Here?

Of course, a wide variety of teleoperated force-feedback systems are already in use or in development (the da Vinci Surgical System, NASA’s Robonaut 2, etc.), so it’s important to emphasize that NHK’s device is novel for a very particular reason: most, if not all, of the force-feedback haptic systems currently in use or in development are ultimately grounded in analog physicality. That is to say, whether it’s repairing a heart valve from another room or, from a NASA building in Texas, tele-pushing a big shiny button on the International Space Station, what’s being touched from afar is ultimately a physical object.

So, what we might consider contemporary practical VR is more accurately a kind of partial VR. As the sense of touch is essential to our experience as human beings, incorporating that sense is a step toward interactive, actual factual, truly virtual virtual reality. Modeling and providing haptic feedback for non-physical objects, i.e., things that don’t really exist, in concert with other virtualization technologies – that’s a big step.

So What Can/Does/Will it Do?

NHK is kind of talking up the benefits for the visually impaired – which is good and noble and whatnot – but perhaps focusing on that is a bit of a PR move, because at least in theory this technology could go way, way beyond simple sensory replacement/enhancement.

An advanced version, incorporating the virtual touching of simulated and/or real objects, could add layers of utility and interactivity to almost any form of work, entertainment, or shopping. From afar we might discern how hard it is to turn a valve in an accident zone (partial VR), how bed sheets of various thread counts feel against the skin (partial or full VR), the rough surface of the wall one hides behind in a videogame (proper VR), or even what it’s like petting the dog, or petting… ummm, a friend (partial and/or proper VR – choose your own adventure)!

That’s a ways off, but in the near term, here’s how NHK envisions functionality for its touchable TV tech:

Matchmaker, Matchmaker, Make Me a Full-Immersion Omni-Sensory VR System!

Okay, so to get this ball rolling: NHK, meet VR upstart Oculus Rift. NHK & Oculus Rift, meet VR/AR mashup Eidos. NHK, Oculus Rift, and Eidos, meet UC Berkeley’s laser-activated pseudo-robotic hydrogels.

We’re all waiting for your pre-holodeck lovechild.

• • •

Reno J. Tibke is the founder and operator of Anthrobotic.com and a contributor at the non-profit Robohub.org.

Via: MyNavi (Japanese/日本語); DigInfo

Images: DigInfo; NHK